Cargo Space devlog #4 - Playing sound effects in a rollback world

I know there isn't supposed to be any sound in space, but I decided I didn't really care :) So I went ahead and added some sound effects to Cargo Space anyway... In this post, I describe the challenges with adding sound to a game with rollback-based netcode, and how I ended up solving them. I present my solution in detail so you can easily adapt it to your own rollback-based Bevy game.

The problem

As I started trying to improve the platforming feel, I thought I'd add some simple sound effects for when the player jumps and lands.

Sounds simple enough, right? Well, it turns out rollback networking adds some complexity to it...

If everything is perfectly predicted and nothing ever rolls back, then sure, just play the sound effect when something happens in the rollback world and it would work out nicely. For example:

    // in character_jump rollback system:
    if state.can_jump() && input.just_pressed(Action::Jump) {
        let v0 = f32::sqrt(2. * *height * gravity);
        vel.0.y += v0;
        // in the case of rollback, the sound could be played multiple times, overlaid
        audio.play(sound_assets.jump.clone());
    }

However, rollbacks will happen. My configured (intentional) input latency is currently 2 frames, meaning any connection with a ping higher than $$\frac{2}{60\,\mathrm{Hz}} \approx 33\,\mathrm{ms}$$ would have rollbacks any time a player presses a button.
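
To make the arithmetic concrete, here's a small sketch. The function names are made up for this post and are not part of ggrs, and it assumes a fixed simulation rate and that `latency_ms` is the time it takes a remote input to reach us. Real sessions have jitter, so treat the result as a rough estimate.

```rust
/// Time budget (in milliseconds) that an input delay of `delay_frames`
/// buys at a fixed simulation rate of `fps` frames per second.
pub fn input_delay_ms(delay_frames: u32, fps: f64) -> f64 {
    delay_frames as f64 * 1000.0 / fps
}

/// Rough estimate of how many frames have to be re-simulated when a remote
/// input takes `latency_ms` to arrive. Zero means the input delay hides the
/// latency completely and no rollback is needed.
pub fn estimated_rollback_frames(latency_ms: f64, delay_frames: u32, fps: f64) -> u32 {
    // Number of whole frames that pass while the input is in flight.
    let frames_in_flight = (latency_ms * fps / 1000.0).ceil() as u32;
    frames_in_flight.saturating_sub(delay_frames)
}
```

With 2 frames of input delay at 60 Hz, `input_delay_ms(2, 60.0)` is roughly 33.3, and anything slower than that starts producing rollbacks.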

The main issue with the naive approach is that whenever a rollback happens, any sound effect triggered between the frame we roll back to and now is played again, on top of the copy that is already playing, just slightly offset. This is obviously not what we want.
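
The double-play problem is easy to reproduce without any engine at all. The sketch below is a toy model, not Bevy or bevy_ggrs code: "playing" a sound just records the frame number in a `Vec`, and a rollback is modelled as re-running the same frames a second time.

```rust
/// Simulates frames `from..to`, "playing" the jump sound (recording the
/// frame number in `played`) on the frame where the jump happens.
fn simulate_naive(from: u32, to: u32, jump_frame: u32, played: &mut Vec<u32>) {
    for frame in from..to {
        if frame == jump_frame {
            // A side effect outside of rollback state: it can't be undone.
            played.push(frame);
        }
    }
}

/// Runs 10 frames, then rolls back to `rollback_to` and re-simulates.
/// Returns every frame on which the sound was triggered.
pub fn naive_playback_with_rollback(jump_frame: u32, rollback_to: u32) -> Vec<u32> {
    let mut played = Vec::new();
    simulate_naive(0, 10, jump_frame, &mut played);
    // A late remote input arrives, so we re-simulate from `rollback_to`.
    simulate_naive(rollback_to, 10, jump_frame, &mut played);
    played
}
```

If the jump on frame 5 lies inside the rolled-back interval (rolling back to frame 3), the sound is triggered twice. If the rollback starts after the jump (frame 7), it is triggered only once.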

Before I present my solution, let's look at a few other scenarios:

Scenario A - Late events

- We predict that the other player will keep doing nothing

- In the meantime, the other player presses the jump button

- We receive the input for the jump "too late", after the configured ggrs session input delay. bevy_ggrs will now roll back to before the first frame we mis-predicted, and simulate enough frames to catch up again.

In this case, the naive approach would probably have been OK: the sound would just be played a few frames too late, and might not match the game state perfectly.

Scenario B - Incorrectly predicted/aborted events

- The other player is running towards a blazing fire, full speed.

- We predict they will keep holding the run button, and start playing a burn-and-die sound on our end

- In the meantime, the other player actually pressed the opposite direction, last minute, barely avoiding the fire. So we have to roll back, and re-simulate the game state as though the player never died.

With the naive approach, the burn-and-die sound would have already started playing, and will just keep playing, even though nobody actually died, leaving our players confused. It would be better to either stop the sound effect abruptly or perhaps quickly fade out the burn-and-die sound, so it causes minimal confusion.

What you want to do in this case probably depends on the nature of the actual sound effect.

Scenario C - Wrongly timed events

Consider the scenario above, but the other player's timing is less fortunate: they try to stop, slowing down, but still burn and die in the fire. In both the predicted and the actual state, the player dies. We will just have started playing the sound effect slightly too early. In this case, depending on the timing and nature of the sound effects, it's probably best to just keep playing the sound effect, and ignore the time offset.

Finding a proper solution

To sum up, an ideal solution would:

- (obviously) Not play the same sound effect multiple times whenever rollbacks happen

- Still play the sound effect when input arrives late (Scenario A)

- On time-critical sound effects, seek to the correct place in the sound file, both on late events (Scenario A) and on wrongly timed events (Scenario C)

- On sound effects that are not time-critical, start the sound effect from the beginning on late events (Scenario A)

- On sound effects that are not time-critical, keep playing the sound effect on wrongly timed events (Scenario C)

- Stop sound effects for mis-predicted events (Scenario B), either by cutting them off or by fading them out rather quickly.
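
The wish list above boils down to a small decision table. Here's one way to write it down as plain Rust. All the type and variant names are hypothetical and only exist for this illustration. They are not taken from Cargo Space, bevy_ggrs or any audio crate.

```rust
/// Whether a sound effect has to stay in sync with the game state.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Timing {
    Critical,
    Relaxed,
}

/// How the desired state differs from what is actually playing.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Mismatch {
    /// Scenario A: the sound should already be playing, but isn't.
    Late { frames: u32 },
    /// Scenario B: the sound is playing, but the event never happened.
    Mispredicted,
    /// Scenario C: the sound is playing, but started at the wrong time.
    WronglyTimed { frames_off: i32 },
}

/// What the audio side should do about it.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Action {
    StartFromBeginning,
    StartAt { frames_in: u32 },
    SeekBy { frames: i32 },
    KeepPlaying,
    FadeOut,
}

pub fn resolve(timing: Timing, mismatch: Mismatch) -> Action {
    match (timing, mismatch) {
        (Timing::Critical, Mismatch::Late { frames }) => Action::StartAt { frames_in: frames },
        (Timing::Relaxed, Mismatch::Late { .. }) => Action::StartFromBeginning,
        (Timing::Critical, Mismatch::WronglyTimed { frames_off }) => {
            Action::SeekBy { frames: frames_off }
        }
        (Timing::Relaxed, Mismatch::WronglyTimed { .. }) => Action::KeepPlaying,
        // Mis-predicted sounds are stopped regardless of timing requirements.
        (_, Mismatch::Mispredicted) => Action::FadeOut,
    }
}
```

Whether `FadeOut` should be a hard stop or a quick fade is a per-sound decision, so a real version would probably carry that as configuration too.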

The solution I came up with is based on the outline described by the Skullgirls developer.

The rough outline is:

- In rollback state, keep information about the desired state of sound effects.

- After the ggrs bevy stage has finished, compare the desired state to the actual sound effects currently playing. If the desired state doesn't match the actual state, stop, start, fade out or seek in sound effects as appropriate for the given sound effect.
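
Stripped of all engine details, the comparison in that outline is a set difference. The sketch below assumes a sound can be identified by a simple key (a string standing in for an asset handle, plus a number). `SoundKey`, `SoundDiff` and `diff_sounds` are names invented for this example.

```rust
use std::collections::HashSet;

/// Identifies one sound instance; a plain string stands in for an asset handle.
pub type SoundKey = (&'static str, usize);

/// What has to change so that the actual playback matches the desired state.
#[derive(Debug, Default, PartialEq)]
pub struct SoundDiff {
    pub to_start: Vec<SoundKey>,
    pub to_stop: Vec<SoundKey>,
}

pub fn diff_sounds(desired: &HashSet<SoundKey>, actual: &HashSet<SoundKey>) -> SoundDiff {
    // Desired but not playing: these need to be started.
    let mut to_start: Vec<SoundKey> = desired.difference(actual).copied().collect();
    // Playing but no longer desired: these need to be stopped or faded out.
    let mut to_stop: Vec<SoundKey> = actual.difference(desired).copied().collect();
    // Sort so the result doesn't depend on hash iteration order.
    to_start.sort();
    to_stop.sort();
    SoundDiff { to_start, to_stop }
}
```

Sounds that appear in both sets are left alone, which is exactly what prevents a rollback from re-triggering something that is already playing.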

Step 1, track desired state

In order to track desired state, I leverage bevy_ggrs as much as possible. The sound effects that should be playing at any given time are just regular Entitys with a Rollback component on them and some metadata about the actual sound effect.

    #[derive(Component)]
    pub struct RollbackSound {
        /// the actual sound effect to play
        pub clip: Handle<AudioSource>,
        /// when the sound effect should have started playing
        pub start_frame: usize,
        /// differentiates several unique instances of the same sound playing at once. (we'll get back to this)
        pub sub_key: usize,
    }

    impl RollbackSound {
        pub fn key(&self) -> (Handle<AudioSource>, usize) {
            (self.clip.clone(), self.sub_key)
        }
    }

    #[derive(Bundle)]
    pub struct RollbackSoundBundle {
        pub sound: RollbackSound,
        /// an id to make sure that the entity will be removed in case of rollbacks
        pub rollback: Rollback,
    }

So when I want to play a sound effect, all I do is spawn an entity representing it from a regular (rollback) gameplay system:

    if state.can_jump() && input.just_pressed(Action::Jump) {
        let v0 = f32::sqrt(2. * *height * gravity);
        vel.0.y += v0;
        commands.spawn(RollbackSoundBundle {
            sound: RollbackSound {
                clip: jump.clone(),
                start_frame: **frame,
                sub_key: rollback.id(),
            },
            rollback: rip.next(),
        });
    }

That means that the rollback schedule doesn't actually start the sound playing, it just creates a representation of what sounds we would have liked to be playing.

Since these entities are tracked by rollback, there will only ever be one of them for a given event in the game. When we roll back, sound effects within the rollback interval will be despawned (and then potentially respawned) as appropriate. This means RollbackSound entities for incorrectly predicted sounds will only exist for a very short time; that is, until we receive the actual contradicting player input.
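
To see why storing sounds in rollback state cleans up after itself, here's a toy model of save/restore. This is not how bevy_ggrs is implemented internally. It's a simplified stand-in where the whole world is a struct that gets cloned every frame, and all names are made up for the example.

```rust
/// A desired sound, stored as part of the rollback state.
#[derive(Clone, Debug, PartialEq)]
pub struct DesiredSound {
    pub clip: &'static str,
    pub start_frame: usize,
}

/// Everything that gets saved and restored on rollback.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct World {
    pub frame: usize,
    pub sounds: Vec<DesiredSound>,
}

/// Advances the world one frame; `jump` is the (possibly predicted) input.
pub fn step(world: &mut World, jump: bool) {
    if jump {
        world.sounds.push(DesiredSound { clip: "jump", start_frame: world.frame });
    }
    world.frame += 1;
}

/// Simulates `inputs`, saving a snapshot before every frame, then rolls back
/// to `rollback_frame` and re-simulates with the `corrected` inputs.
/// Panics if `rollback_frame` is not a valid index into both slices.
pub fn simulate_with_rollback(inputs: &[bool], rollback_frame: usize, corrected: &[bool]) -> World {
    let mut world = World::default();
    let mut snapshots = Vec::new();
    for &jump in inputs {
        snapshots.push(world.clone());
        step(&mut world, jump);
    }
    // Restoring the snapshot also removes sounds spawned after it was taken.
    world = snapshots[rollback_frame].clone();
    for &jump in &corrected[rollback_frame..] {
        step(&mut world, jump);
    }
    world
}
```

A mis-predicted jump disappears from `sounds` after the rollback, a late jump shows up with its original `start_frame`, and a correctly predicted jump still exists exactly once.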

Step 2, compare desired state against actual state

Outside the rollback world, in the regular Update stage, I have a system that checks what sounds should have been playing and compares that to the sounds that are actually playing. If there are new RollbackSounds we haven't seen before, we start playing them:

    /// The "Actual" state.
    ///
    /// I'm using bevy_kira_audio for sound, but this could probably work similarly with bevy_audio.
    #[derive(Resource, Reflect, Default)]
    struct PlaybackStates {
        playing: HashMap<(Handle<AudioSource>, usize), Handle<AudioInstance>>,
    }

    fn sync_rollback_sounds(
        mut current_state: ResMut<PlaybackStates>,
        mut audio_instances: ResMut<Assets<AudioInstance>>,
        desired_query: Query<&RollbackSound>,
        audio: Res<Audio>,
        frame: Res<FrameCount>,
    ) {
        // remove any finished sound effects
        current_state.playing.retain(|_, handle| {
            !matches!(
                audio_instances.state(handle),
                PlaybackState::Stopped | PlaybackState::Stopping { .. }
            )
        });

        let mut live = HashSet::new();

        // start/update sound effects
        for rollback_sound in desired_query.iter() {
            let key = rollback_sound.key();
            if current_state.playing.contains_key(&key) {
                // already playing
                // todo: compare frames and seek if time critical
            } else {
                let frames_late = **frame - rollback_sound.start_frame;
                const MAX_SOUND_DELAY: usize = 10;
                // ignore any sound effects that are *really* late
                // todo: make configurable
                if frames_late <= MAX_SOUND_DELAY {
                    if frames_late > 0 {
                        // todo: seek if time critical
                        info!("playing sound effect {} frames late", frames_late);
                    }
                    let instance_handle = audio.play(rollback_sound.clip.clone()).handle();
                    current_state
                        .playing
                        .insert(key.to_owned(), instance_handle);
                }
            }

            // we keep track of `RollbackSound`s still existing,
            // so we can remove any sound effects not present later
            live.insert(rollback_sound.key().to_owned());
        }

        // stop interrupted sound effects
        for (_, instance_handle) in current_state
            .playing
            .drain_filter(|key, _| !live.contains(key))
        {
            if let Some(instance) = audio_instances.get_mut(&instance_handle) {
                // todo: add config to use linear tweening, stop or keep playing as appropriate
                // instance.stop(default()); // immediate
                instance.stop(AudioTween::linear(Duration::from_millis(100)));
            } else {
                error!("Audio instance not found");
            }
        }
    }

Now, I promised I'd explain the (sub-)keys... As you can see, we keep a hashmap of the currently playing sounds. The RollbackSound::key method is used to index this hashmap, and it's simply the combination of the actual sound we wanted to play, and the mysterious "sub-key".

So why did I add the sub-key? It's there to handle situations like two players jumping at the same time. In that case, we actually want two jump sounds to play at the same time. If I hadn't added the sub-key, and just used the sound effect as the key, the desired-to-actual comparison would think it was the same sound playing, one just being a mis-predicted version of the other. So essentially the two players would "fight" over who gets to have their jump sound played.

So that's why I added the sub_key. In this case, we can simply use the rollback id of the entity as the sub_key, and the sounds will be treated independently by the sound system, while incorrectly timed sound effects are still handled properly for each player.
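
Here's the sub-key argument as a tiny experiment. It's a simplified model: a string stands in for the clip handle, and `distinct_sounds` is a helper invented for this example that counts how many separate sounds a key-based map would track.

```rust
use std::collections::HashSet;
use std::hash::Hash;

/// Registers the desired sounds under keys built by `key_of` and returns how
/// many distinct sounds the sync system would consider to be playing.
pub fn distinct_sounds<K: Hash + Eq>(
    desired: &[(&'static str, usize)],
    key_of: impl Fn(&(&'static str, usize)) -> K,
) -> usize {
    let mut playing = HashSet::new();
    for sound in desired {
        // Sounds with the same key collapse into a single entry.
        playing.insert(key_of(sound));
    }
    playing.len()
}
```

For two players (rollback ids 1 and 2) jumping on the same frame, keying on the clip alone, `distinct_sounds(&desired, |s| s.0)`, gives 1, so only one jump sound would play. Keying on clip plus sub-key, `distinct_sounds(&desired, |s| *s)`, gives 2.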

As you can see, my code has some todos in it: I didn't implement seeking on time-critical sound effects, and I didn't make fading out configurable. The reason is that I don't actually have any time-critical sound effects yet, and I'm trying not to get ahead of myself by implementing too many features I don't need (YAGNI). I'm confident that I can easily get there, and that's enough.
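
For what it's worth, the missing seek step would mostly be a frames-to-time conversion. The following is an untested sketch of that part only, under the assumption of a fixed simulation rate. `seek_position` is a hypothetical helper, and the resulting position would still have to be handed to whatever seek call the audio backend provides.

```rust
use std::time::Duration;

/// Position to start at (or seek to) in the clip when a sound is
/// `frames_late` frames behind, at a simulation rate of `fps`.
/// Returns `None` when the sound is more than `max_delay` frames late
/// and should be skipped entirely.
pub fn seek_position(frames_late: usize, max_delay: usize, fps: u32) -> Option<Duration> {
    if frames_late > max_delay {
        return None;
    }
    Some(Duration::from_secs_f64(frames_late as f64 / fps as f64))
}
```

A sound that is 30 frames late at 60 frames per second would start half a second into the clip, provided the maximum delay allows it.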

The last piece of the puzzle is a simple maintenance system to despawn sounds in the rollback world when they are supposed to be finished playing.

    fn remove_finished_sounds(
        frame: Res<FrameCount>,
        query: Query<(Entity, &RollbackSound)>,
        mut commands: Commands,
        audio_sources: Res<Assets<AudioSource>>,
    ) {
        for (entity, rollback_sound) in query.iter() {
            // perf: cache frames_to_play instead of checking audio_sources every frame?
            if let Some(audio_source) = audio_sources.get(&rollback_sound.clip) {
                let frames_played = **frame - rollback_sound.start_frame;
                let seconds_to_play = audio_source.sound.duration().as_secs_f64();
                let frames_to_play = (seconds_to_play * ROLLBACK_FPS as f64) as usize;
                if frames_played >= frames_to_play {
                    commands.entity(entity).despawn();
                }
            }
        }
    }
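
The frame arithmetic in that system can be pulled out into plain functions, which makes it easy to check in isolation. This is only a sketch with made-up helper names. It takes the frame rate as a parameter instead of using `ROLLBACK_FPS`, and it uses `saturating_sub` as a guard in case `start_frame` is ever ahead of the current frame.

```rust
use std::time::Duration;

/// Number of simulation frames a clip of the given length lasts,
/// truncated towards zero like the `as usize` cast in the system above.
pub fn frames_to_play(clip_length: Duration, fps: usize) -> usize {
    (clip_length.as_secs_f64() * fps as f64) as usize
}

/// Whether a sound that started on `start_frame` is done by `current_frame`.
pub fn is_finished(
    current_frame: usize,
    start_frame: usize,
    clip_length: Duration,
    fps: usize,
) -> bool {
    // saturating_sub avoids an underflow panic if start_frame > current_frame
    current_frame.saturating_sub(start_frame) >= frames_to_play(clip_length, fps)
}
```

A half-second clip at 60 frames per second lasts 30 frames, so a sound started on frame 100 is despawned on frame 130.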

So this piece of code feels very general and could be added to any game needing rollback and sounds, or at the very least to games using bevy_ggrs and bevy_kira_audio. On one hand, I kind of want to create a crate out of it, but I'm also having a bit of trouble wrapping my head around how to do "plug-ins" for the bevy_ggrs rollback schedule (since these systems need to exist both in the regular bevy schedule and in the rollback schedule).

It kind of feels like we would need a couple more traits and functions in bevy_ggrs. However, I strongly suspect the Bevy stageless RFC is going to turn any solution we come up with upside down, so I'll hold back until Bevy 0.10 and instead provide this handy devlog for anyone else wanting sound in their bevy_ggrs game.

Looping sound effects are simpler

As I was about to add a sound effect to the jetpack thruster, I discovered that looping sound effects actually don't need all this complex logic. For these kinds of sounds effects, it's enough to simply fade the sound in or out depending on the current state; in this case whether the jetpack is on or not.

I was able to do all of this without really changing any of the rollback systems. I have a FadedLoopSound component:

    #[derive(Component, Reflect)]
    #[reflect(Component)]
    pub struct FadedLoopSound {
        /// The actual sound playing, if any
        pub audio_instance: Option<Handle<AudioInstance>>,
        /// The sound to play
        pub clip: Handle<AudioSource>,
        /// number of seconds to fade in
        pub fade_in: f32,
        /// number of seconds to fade out
        pub fade_out: f32,
        /// whether the sound effect should be playing or not
        pub should_play: bool,
    }

And in a regular update system I set its should_play field depending on the current state:

sound.should_play = character_state == &CharacterState::Fly && dir != Vec2::ZERO;

And finally, I have a system that takes care of the actual fading in and out:

    fn update_looped_sounds(
        mut sounds: Query<&mut FadedLoopSound>,
        mut audio_instances: ResMut<Assets<AudioInstance>>,
        audio: Res<Audio>,
    ) {
        for mut sound in sounds.iter_mut() {
            if sound.should_play {
                if sound.audio_instance.is_none() {
                    sound.audio_instance = Some(
                        audio
                            .play(sound.clip.clone())
                            .looped()
                            .linear_fade_in(Duration::from_secs_f32(sound.fade_in))
                            .handle(),
                    );
                }
            } else if let Some(instance_handle) = sound.audio_instance.take() {
                if let Some(instance) = audio_instances.get_mut(&instance_handle) {
                    instance.stop(AudioTween::linear(Duration::from_secs_f32(sound.fade_out)));
                }
            };
        }
    }
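
The logic of that system is a two-state machine, and it can be exercised without an audio backend. In the sketch below, `LoopState` is a plain-data stand-in for `FadedLoopSound` (a `bool` replaces the optional instance handle) and `LoopCommand` describes what the backend would be asked to do. Both names are invented for this example.

```rust
/// What the audio backend should be asked to do this frame.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum LoopCommand {
    /// Start the loop, fading in over the given number of seconds.
    FadeIn(f32),
    /// Stop the loop, fading out over the given number of seconds.
    FadeOut(f32),
    /// Nothing to do; playback already matches `should_play`.
    Nothing,
}

/// Plain-data stand-in for `FadedLoopSound`.
#[derive(Debug)]
pub struct LoopState {
    pub playing: bool,
    pub fade_in: f32,
    pub fade_out: f32,
    pub should_play: bool,
}

pub fn update_loop(state: &mut LoopState) -> LoopCommand {
    match (state.should_play, state.playing) {
        (true, false) => {
            state.playing = true;
            LoopCommand::FadeIn(state.fade_in)
        }
        (false, true) => {
            state.playing = false;
            LoopCommand::FadeOut(state.fade_out)
        }
        // Already in the desired state.
        _ => LoopCommand::Nothing,
    }
}
```

Because the command only depends on the current `should_play` value, a rollback that flips it back and forth just produces a fade in followed by a fade out, never overlapping copies of the loop.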

Maybe it's kind of weird to have two solutions that do very similar things... however, I think I'd rather have two really simple systems than try to fit everything into one complex system with lots of edge cases. We'll see as the game evolves.

Actually adding some sounds

So after setting all this up, I finally added some sounds to the game. This is what I've got so far:

Maybe the player land sound is a little too bass-ey, and the jump sound slightly too cartoony, and the engine sound a bit too far in the "old boat" direction... I don't really know yet.

As with everything else in my game, I think this serves as a good first pass. I want to stay loose and experiment. Later, once I have an overall feel for what the game is, I can come back and make it more cohesive and polished.

Putting it to the test

I wanted to also make sure that sounds were feeling as good as they could in the case of extreme rollbacks.

In order to test this, I needed to provoke some serious network latency.

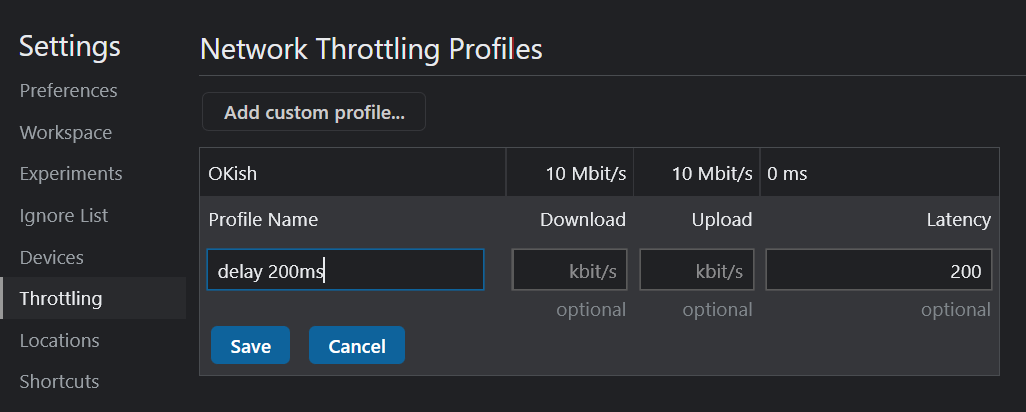

First, I tried using Chrome's built-in throttling tool...

However, it turns out it doesn't actually affect WebRTC, only regular http requests.

Firefox, it turns out, gets some slight performance problems as soon as I have two windows visible, which leads to a lot of crackling noise; see this issue.

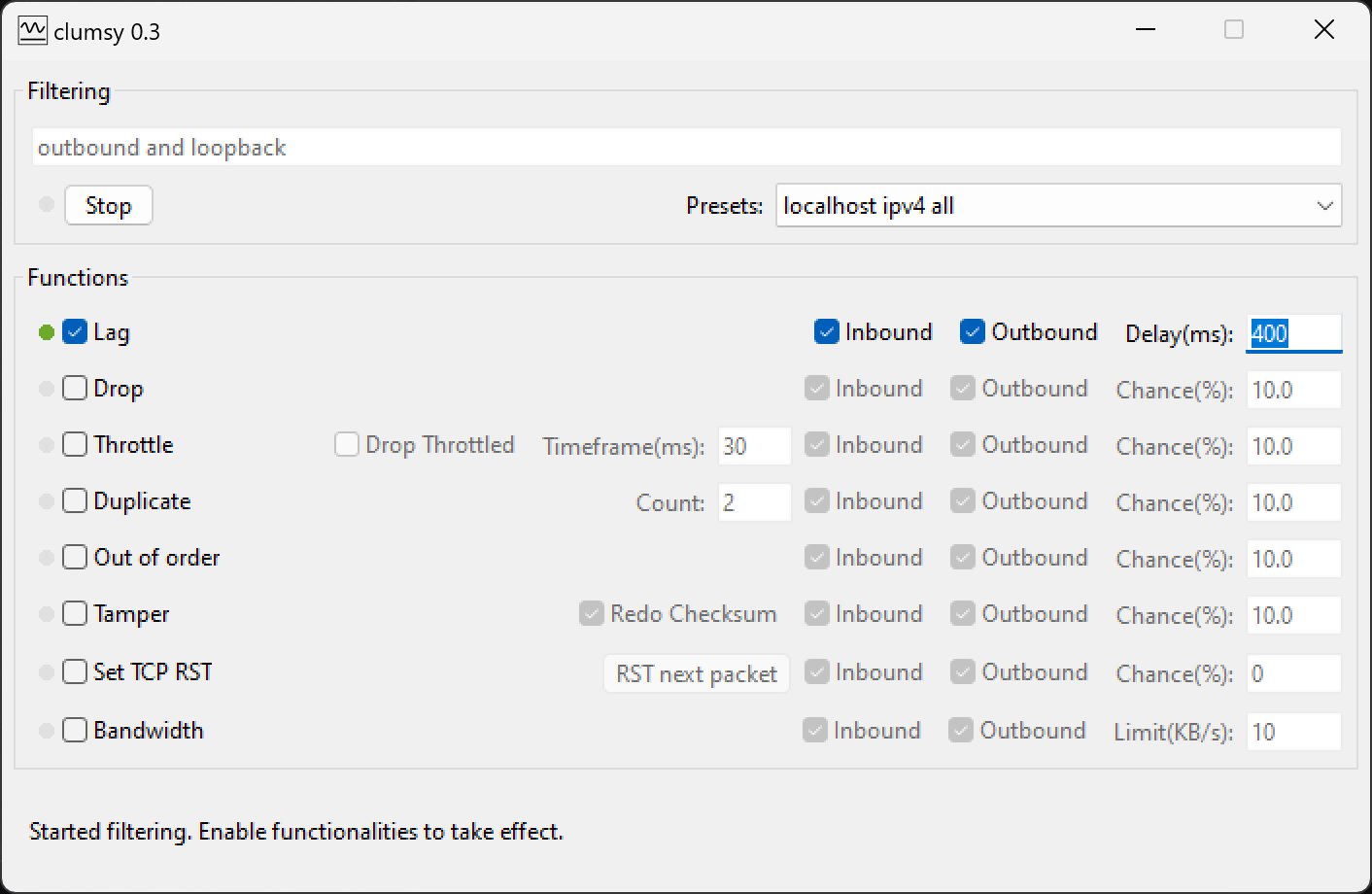

That left me looking for a native solution. Since I'm currently running Windows, I ended up using Clumsy, which was ridiculously easy to set up:

This was actually too much of a delay for my current GGRS settings: it got stuck trying to synchronize and the session never started. So I had to bump the prediction window and max frames behind parameters of GGRS:

    let mut session_builder = SessionBuilder::<GGRSConfig>::new()
        .with_max_prediction_window(40)
        .with_max_frames_behind(42)
        .unwrap()

This let me start a session with a ridiculous ping of 800 ms...

The left window is muted and is the one receiving input, while the other one is desperately trying to keep up with a ping of over 800ms. As you'd expect the snapping is pretty horrible, but the sound effects still manage to convey some meaning:

- We get a jump sound, even if it's played way too late

- When we incorrectly assume the other player exits the ship, we start playing the jetpack sound, but we quickly fade it out when we realize they did not.

- The landing sound plays exactly on time, and correctly handles the case when we have to roll back past the landing to re-simulate horizontal movement (and landing in a different spot).

Of course I'll never want to support such high latencies, but it's nice to be able to go to extremes when testing subtle bugs.

Updates

That's it for today. I made some other progress on my game, but I thought I'd keep the scope narrow for this one :)

For future updates, join the Discord server or follow me on Mastodon.